SEO matters: if your website is not SEO-ready, ranking well on the search engines, or even appearing on them at all, is close to impossible.

Below are the major checks to run when conducting your very first SEO audit. Before starting, you will need a few tools:

- Google Analytics.

- Google Search Console.

- Ahrefs.

- Screaming Frog.

- Copyscape.

- Title Tag Pixel Width Checker.

The list above is limited, so feel free to add other tools depending on your preferences and the resources you have. Once you have access to them, let us start with a thorough website SEO audit.

1. Website Crawl:

Use Screaming Frog for this step. Enter your website URL and the tool will crawl every URL on the site, surfacing issues such as 301 and 302 redirects, 404 pages, 5xx server errors, duplicate pages, duplicate page titles, URL errors, and heading lengths.

Check every section of the results and download the report so you can correct the issues.

Note that the free version of Screaming Frog crawls up to 500 URLs only. If you need to crawl more than that, you will need the paid version.
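Once the report is downloaded, it helps to sort the URLs by the kind of problem they have. The snippet below is only a minimal sketch of that idea: the `group_by_issue` helper and the sample rows are hypothetical, and a real export would have to be read from the downloaded file first.

```python
from collections import defaultdict

def group_by_issue(rows):
    """Group crawled URLs by the kind of issue their HTTP status suggests."""
    issues = defaultdict(list)
    for url, status in rows:
        if status in (301, 302):
            issues["redirect"].append(url)
        elif status == 404:
            issues["not_found"].append(url)
        elif 500 <= status <= 599:
            issues["server_error"].append(url)
    return dict(issues)

# Hypothetical rows, as they might appear in an exported crawl report.
crawl = [
    ("https://www.yourdomain.com/", 200),
    ("https://www.yourdomain.com/old-page", 301),
    ("https://www.yourdomain.com/missing", 404),
    ("https://www.yourdomain.com/broken", 503),
]

report = group_by_issue(crawl)
for issue, urls in report.items():
    print(issue, urls)
```

Pages that return 200 are left out on purpose, so the result only lists what needs attention.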

2. Verify that the site resolves to a single domain version:

When a website is created, it can end up reachable under several versions, and visitors may type any of them into the browser:

- http://yourdomain.com

- http://www.yourdomain.com

- https://yourdomain.com

- https://www.yourdomain.com

Whichever version a visitor uses, they must land on one version only: the one you want to target. I would suggest https://www.yourdomain.com, since an HTTPS version is preferable wherever the site supports it. Keep it consistent. Where required, add 301 redirects or canonical tags pointing to this version.
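The check itself can be sketched in a few lines. This is an illustration under assumptions: `domain_variants` and `stray_variants` are hypothetical helpers, the preferred version is just an example choice, and the `observed` mapping stands in for the final URLs you would note down after opening each variant in a browser.

```python
from urllib.parse import urlsplit

def domain_variants(domain):
    """Return the four scheme/www combinations of a domain."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [
        f"{scheme}://{host}"
        for scheme in ("http", "https")
        for host in (bare, f"www.{bare}")
    ]

def stray_variants(final_urls, preferred):
    """List the variants whose final landing URL is not the preferred version."""
    want = urlsplit(preferred)
    strays = []
    for start, final in final_urls.items():
        got = urlsplit(final)
        if (got.scheme, got.netloc) != (want.scheme, want.netloc):
            strays.append(start)
    return strays

variants = domain_variants("yourdomain.com")

# Hypothetical observations: where each variant ended up after redirects.
observed = {
    "http://yourdomain.com": "https://www.yourdomain.com/",
    "http://www.yourdomain.com": "https://www.yourdomain.com/",
    "https://yourdomain.com": "https://yourdomain.com/",
    "https://www.yourdomain.com": "https://www.yourdomain.com/",
}

strays = stray_variants(observed, "https://www.yourdomain.com")
print(strays)
```

Any variant that shows up in `strays` is a candidate for a 301 redirect.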

3. Page Titles and Content:

Open the source of the home page by right-clicking the page and selecting View Page Source. Once there, check the following:

Title : Optimize the title for the keyword you want that page to focus on. Keep it below roughly 55 characters. Beyond that, Google will truncate it in the search results. The real cut-off depends on pixel width, which is what the Title Tag Pixel Width Checker is for.

Description : Write a keyword-optimized snippet that encourages the user to click through to your page. Keep it below 150 characters.

Header Tags : The H1 tag is still an important on-page ranking factor.

We want to make sure that every page on the site has a unique, descriptive, H1 tag.

For the home page, you will generally want to:

- Communicate the site’s main purpose

- Include 1 or 2 high-level keywords in the process

Here is the current H1 tag:

Compare Life Insurance Quotes Within 1 Minute

That looks great, doesn't it?

Now check the other subheadings (H2s, H3s).

H2s and H3s are the other headings on a page: the subheadings. Here too you can focus on your keywords and follow the same rules as for the H1.

Keep in mind that a page should have only one H1. If you do add further H1s, keep them out of Google's view, as they would otherwise confuse the crawlers about the page's intent.
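The title, description and H1 checks above can also be run on the page source with a short script. This is a rough sketch, not a replacement for the tools listed earlier: `OnPageAudit` and `audit` are hypothetical names, the sample page is made up, and the limits are simply the character counts mentioned above.

```python
from html.parser import HTMLParser

class OnPageAudit(HTMLParser):
    """Collect the title, the meta description and all H1 texts of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1s = []
        self._current = None  # tag whose text is currently being collected

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._current = "title"
        elif tag == "h1":
            self._current = "h1"
            self.h1s.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1s[-1] += data

def audit(html, max_title=55, max_description=150):
    """Return a list of problems found in the page source."""
    parser = OnPageAudit()
    parser.feed(html)
    problems = []
    title_len = len(parser.title.strip())
    if title_len > max_title:
        problems.append(f"title is {title_len} characters, limit is {max_title}")
    description_len = len(parser.description.strip())
    if description_len > max_description:
        problems.append(
            f"description is {description_len} characters, limit is {max_description}"
        )
    if len(parser.h1s) != 1:
        problems.append(f"expected exactly one H1, found {len(parser.h1s)}")
    return problems

# Made-up page source with a second H1 added on purpose.
page = """<html><head>
<title>Compare Life Insurance Quotes Within 1 Minute</title>
<meta name="description" content="Compare quotes from leading insurers.">
</head><body>
<h1>Compare Life Insurance Quotes Within 1 Minute</h1>
<h1>Second heading</h1>
</body></html>"""

print(audit(page))
```

Character counts are only a proxy for what Google displays, so treat a warning here as a prompt to look closer rather than a verdict.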

4. Content Uniqueness:

"Content is King": these words should always resonate whenever you are auditing a website or adding new content. Use Copyscape to check that the website content is unique and free of plagiarism. Copy the page content and paste it into the text section of Copyscape. It will tell you whether the content is unique and, if not, where it has been copied from. It will also track internal copies, meaning the same content appearing on more than one page of the website.

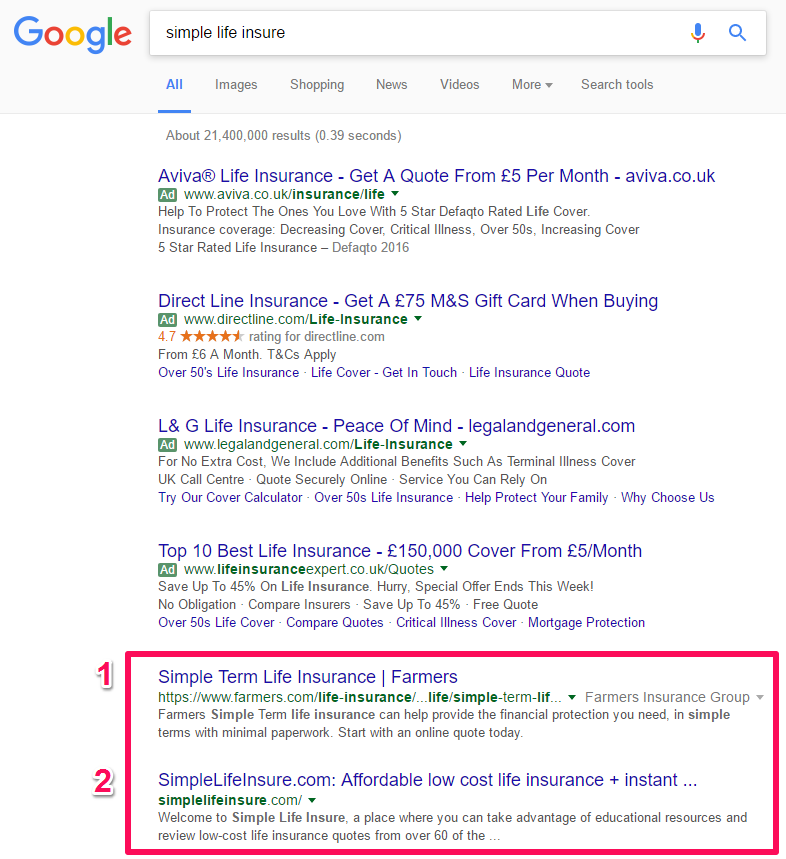
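For the internal copies in particular, a rough first pass can be done locally before reaching for Copyscape. The sketch below compares pages by overlapping three-word sequences (Jaccard similarity on word shingles). This is a swapped-in technique for illustration, not how Copyscape itself works, and the page texts, the `similarity` helper and the 0.8 threshold are all assumptions.

```python
from itertools import combinations

def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = text.lower().split()
    return {" ".join(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(a, b, size=3):
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)

# Hypothetical page texts keyed by URL path.
pages = {
    "/term-life": "compare term life insurance quotes online in one minute",
    "/term-life-copy": "compare term life insurance quotes online in one minute",
    "/about": "we are a small team based in the city",
}

duplicates = [
    (path_a, path_b)
    for (path_a, text_a), (path_b, text_b) in combinations(pages.items(), 2)
    if similarity(text_a, text_b) >= 0.8
]
print(duplicates)
```

Pairs that come back from this check are the ones worth rewriting or consolidating.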

5. Do a Google Search:

Now let's move over to the Google search bar. Enter your domain and search. There are two possible outcomes:

1. Whether your website is a new or an old brand, it should appear first in the results. If it does, there is nothing to worry about.

2. If your domain isn't in the No. 1 spot, there might be concerns related to algorithmic or manual penalties.

Below you can see that, on typing simple life insurance, the company shows up in the 2nd spot. That is odd, but not necessarily a worry, as it might be a fairly new brand that Google has not yet recognized. In an ideal situation, though, you should hold the No. 1 spot for your brand name.

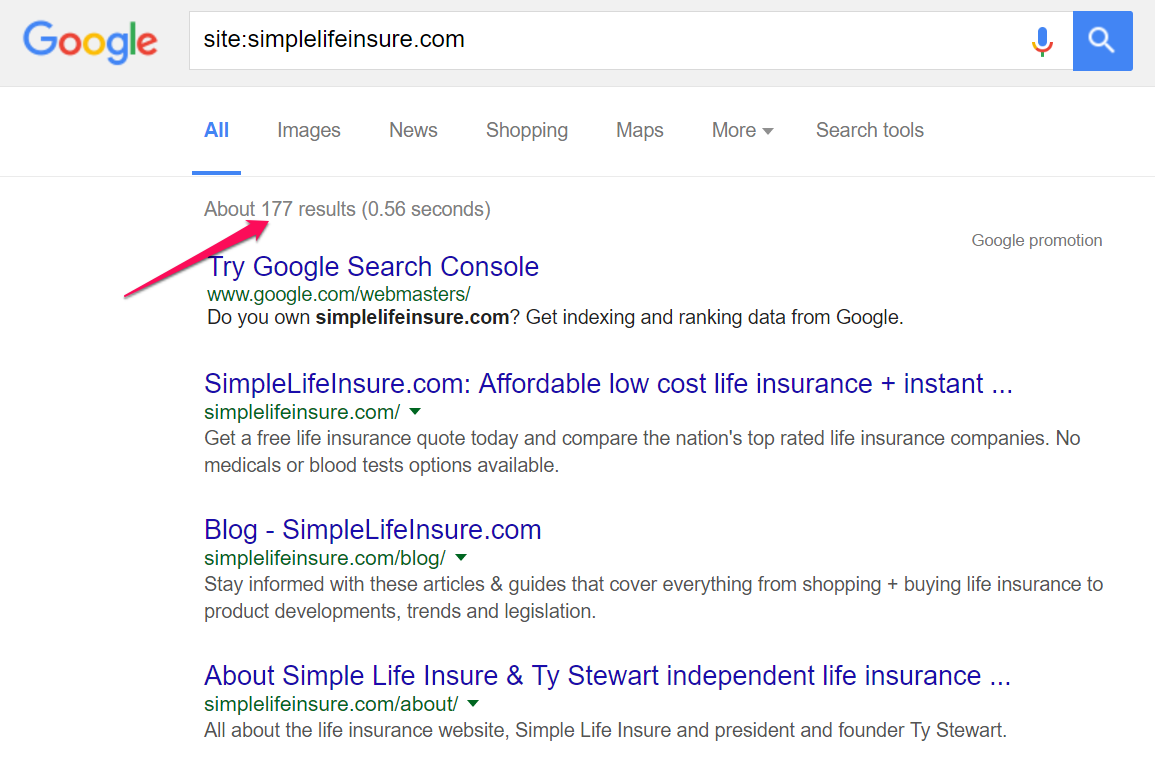

Do a site: operator search

Next, we’ll perform a search using the site: operator, which will show us how many pages Google currently has indexed for the domain.

This can be a good early indicator of indexing issues.

The format for the search is “site:yourdomain.com” without the quotes.

We can see that there are currently 177 pages included in the index for this domain.

That seems a little high considering our crawl only discovered 92 URLs.

If we scroll down we can see there are quite a few "junk" pages that we'll want to get rid of.

This makes the site: search a very good way to review every indexed page and judge its strength in terms of content and other factors.

Image Source: Ahrefs.com
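The gap between indexed pages and crawled pages is easier to act on as two lists. Here is a small sketch of that comparison, assuming you have collected the indexed URLs from the site: results by hand and the crawled URLs from the crawl report. The helper name and the sample URLs are hypothetical.

```python
def compare_index_to_crawl(indexed, crawled):
    """Split the URLs into those only in the index and those only in the crawl."""
    indexed, crawled = set(indexed), set(crawled)
    return {
        "indexed_not_crawled": sorted(indexed - crawled),
        "crawled_not_indexed": sorted(crawled - indexed),
    }

# Hypothetical URL lists.
indexed_urls = [
    "https://www.yourdomain.com/",
    "https://www.yourdomain.com/quotes",
    "https://www.yourdomain.com/tag/old-junk",
]
crawled_urls = [
    "https://www.yourdomain.com/",
    "https://www.yourdomain.com/quotes",
    "https://www.yourdomain.com/contact",
]

diff = compare_index_to_crawl(indexed_urls, crawled_urls)
print(diff["indexed_not_crawled"])
print(diff["crawled_not_indexed"])
```

URLs that are indexed but never turned up in the crawl are the likely "junk" pages, while crawled URLs missing from the index may point to an indexing issue.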

6. Google Analytics:

Now move on to Google Analytics (GA) to understand the traffic flowing to your pages. If it is not installed yet, installing it is your first task as an SEO. Then work out which sources bring traffic to each page of your website:

Organic : Traffic through search.

Referral : From referral sites like Quora etc.

Social : Facebook, Reddit, Twitter traffic.

Paid : If you are running any paid activities, that traffic.

Others

Understand each traffic channel and manage your pages accordingly.
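If you export the numbers, a quick way to see which channel carries a page is to turn the session counts into shares. The snippet below is a minimal sketch with made-up figures: `channel_shares` is a hypothetical helper and the rows are not the output format of any GA report.

```python
from collections import Counter

def channel_shares(rows):
    """Sum sessions per channel and return each channel's share of the total."""
    totals = Counter()
    for channel, sessions in rows:
        totals[channel] += sessions
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {channel: count / grand_total for channel, count in totals.items()}

# Made-up session counts for one page.
rows = [
    ("Organic", 400),
    ("Organic", 200),
    ("Referral", 100),
    ("Social", 200),
    ("Paid", 100),
]

shares = channel_shares(rows)
for channel, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{channel}: {share:.0%}")
```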

7. Google Search Console:

GSC is a very powerful tool in many ways. Here you can easily track and manage the following:

Crawl Errors: We can see that Google is reporting a number of 404 (not found) errors and several 403 (access denied) errors. Work through them and redirect the non-existent pages using 301 redirects.

Search Traffic: Review your website's internal links, the external links pointing to you, any manual actions by Google, and so on.

HTML Improvements: Check page-level issues with meta descriptions and titles, such as length and duplication.

These are the major audits you can do with GSC.
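When there are many 404s, a draft redirect map saves time. The sketch below pairs each missing path with the most similar live path using Python's `difflib`. It is one possible heuristic rather than anything GSC does for you; the paths, the `suggest_redirects` name and the 0.6 cutoff are assumptions, and every suggestion should be reviewed by hand before a 301 goes live.

```python
from difflib import get_close_matches

def suggest_redirects(missing_paths, live_paths, fallback="/"):
    """Map each 404 path to the most similar live path, or to the fallback."""
    redirects = {}
    for path in missing_paths:
        matches = get_close_matches(path, live_paths, n=1, cutoff=0.6)
        redirects[path] = matches[0] if matches else fallback
    return redirects

# Hypothetical paths: pages that exist, and pages reported as not found.
live = ["/", "/life-insurance-quotes", "/about", "/contact"]
missing = ["/life-insurance-quote", "/zzz"]

redirect_map = suggest_redirects(missing, live)
for old, new in redirect_map.items():
    print(f"301: {old} -> {new}")
```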

8. Accessibility:

By accessibility I mean how easily Google's crawlers can access your website. There are a few things you need to check:

A. Robots.txt:

The robots.txt file is used to restrict search engine crawlers from accessing sections of your website. Although the file is very useful, it's also an easy way to inadvertently block crawlers.

As an extreme example, the following robots.txt entry restricts all crawlers from accessing any part of your site:

    User-agent: *
    Disallow: /

Manually check the robots.txt file and make sure it's not restricting access to important sections of your site. You can also use your Google Search Console account (formerly Google Webmaster Tools) to identify URLs that are being blocked by the file.
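The manual check can be backed up with Python's standard `urllib.robotparser` module. This is a small sketch under assumptions: the `is_blocked` helper, the two robots.txt bodies and the URLs are made up for illustration, with the block-everything case written in its usual form of `User-agent: *` followed by `Disallow: /`.

```python
from urllib.robotparser import RobotFileParser

def is_blocked(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text disallows the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)

# Hypothetical robots.txt bodies.
block_everything = "User-agent: *\nDisallow: /"
block_admin_only = "User-agent: *\nDisallow: /admin/"

important_url = "https://www.yourdomain.com/life-insurance-quotes"

print(is_blocked(block_everything, important_url, "Googlebot"))
print(is_blocked(block_admin_only, important_url, "Googlebot"))
```

Running your real robots.txt text against a list of important URLs is a quick way to catch a rule that blocks more than intended.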

B. Robots Meta Tags:

The robots meta tag is used to tell search engine crawlers if they are allowed to index a specific page and follow its links.

When analyzing your site's accessibility, you want to identify pages that are inadvertently blocking crawlers. Here is an example of a robots meta tag that prevents crawlers from indexing a page and following its links:

    <meta name="robots" content="noindex, nofollow">
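To spot such pages in bulk, you can scan the page source for the robots meta tag. The following is a rough sketch using Python's built-in HTML parser: `RobotsMeta` and `robots_directives` are hypothetical names, and the sample markup is made up.

```python
from html.parser import HTMLParser

class RobotsMeta(HTMLParser):
    """Collect the directives of every robots meta tag on a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def robots_directives(html):
    """Return the set of robots directives found in the page source."""
    parser = RobotsMeta()
    parser.feed(html)
    return parser.directives

# Made-up page source that blocks both indexing and link following.
page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'

found = robots_directives(page)
if "noindex" in found:
    print("This page asks crawlers not to index it")
if "nofollow" in found:
    print("This page asks crawlers not to follow its links")
```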

C. XML Sitemap:

Your site's XML Sitemap provides a roadmap for search engine crawlers to ensure they can easily find all of your site's pages.
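A useful check is whether the sitemap actually lists the pages your crawl found. The sketch below reads the `<loc>` entries of a standard sitemap with Python's `xml.etree.ElementTree`. The sitemap content, the crawled URLs and the `sitemap_urls` helper are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the standard sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return the URLs listed in the <loc> elements of a sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]

# Made-up sitemap with two entries.
sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.yourdomain.com/</loc></url>
  <url><loc>https://www.yourdomain.com/quotes</loc></url>
</urlset>"""

# Hypothetical URLs taken from the crawl report.
crawled = [
    "https://www.yourdomain.com/",
    "https://www.yourdomain.com/quotes",
    "https://www.yourdomain.com/contact",
]

listed = sitemap_urls(sitemap)
missing_from_sitemap = sorted(set(crawled) - set(listed))
print(missing_from_sitemap)
```

Pages that the crawl found but the sitemap omits are worth adding, provided you want them indexed.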

D. Page Speed:

Check that the page speed is optimal. It's always advisable to keep the page load time to around 2 seconds or less. Users have a very limited attention span, and if your site takes too long to load, they will leave.

You can use Pingdom.com and Google PageSpeed Insights for that purpose.

These are some of the major SEO audits you can conduct. Beyond these, there are additional checks you can run based on your specific requirements.
